Mood-Driven Colorization of Virtual Indoor Scenes

Michael S Solah*¹, Haikun Huang*¹, Jiachuan Sheng**², Tian Feng³, Marc Pomplun⁴, Lap-Fai Yu¹

¹George Mason University

²Tianjin University of Finance and Economics

³Zhejiang University

⁴University of Massachusetts Boston

*Equal Contributions

**Corresponding Author

Abstract

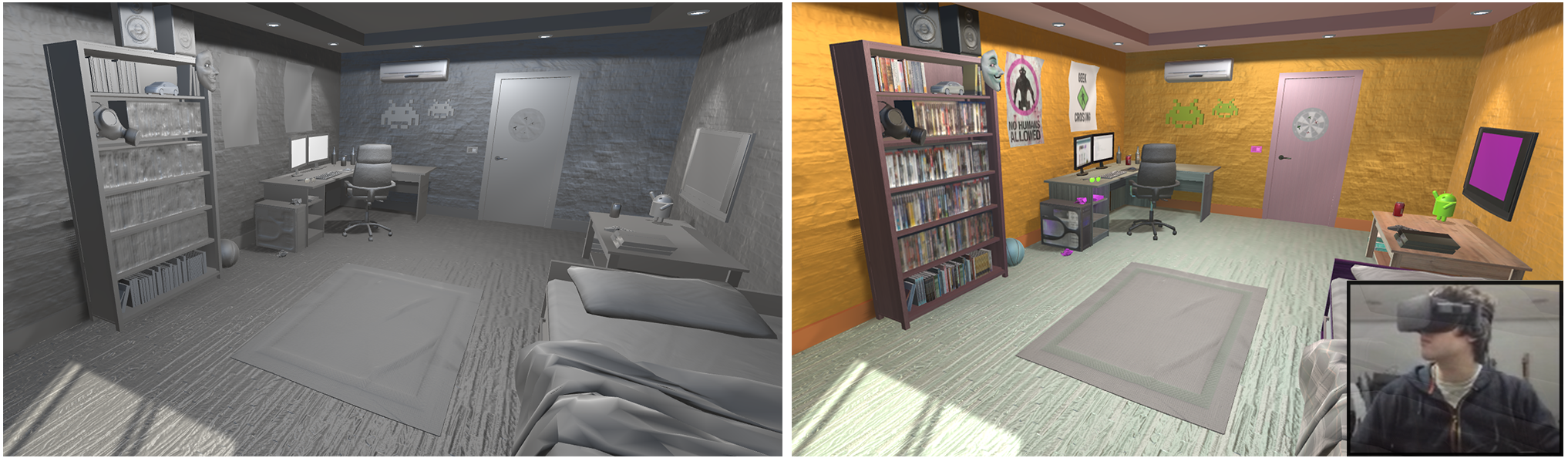

A challenging task in designing virtual scenes for Virtual Reality (VR) is making a scene evoke a particular mood in viewers. The subjective nature of moods makes this goal uncertain. We propose a novel approach that automatically adjusts the colors of object textures in a virtual indoor scene so that the scene matches a target mood. We trained a classifier on features extracted via deep learning from a dataset of 25,000 images, including building and home interiors. The classifier contributes to an optimization process that automatically colorizes virtual scenes according to the target mood. We tested our approach on four different indoor scenes and conducted a user study whose statistical analysis demonstrates its efficacy, focusing on the impact of scenes experienced with a VR headset.

Keywords

Virtual reality, Perception, Visualization design and evaluation methods.

Publication

Mood-Driven Colorization of Virtual Indoor Scenes

Michael S Solah, Haikun Huang, Jiachuan Sheng, Dr. Tian Feng, Marc Pomplun, Lap-Fai Yu

IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR 2022)

Paper, Supplementary, Video

BibTeX

@article{moodvr,
  author = {Michael S Solah and Haikun Huang and Jiachuan Sheng and Tian Feng and Marc Pomplun and Lap-Fai Yu},
  title = {Mood-Driven Colorization of Virtual Indoor Scenes},
  journal = {IEEE Transactions on Visualization and Computer Graphics},
  year = {2022}
}